The digital landscape is constantly shifting, and one of the most potent forces reshaping online content is Artificial Intelligence. If you’ve spent any time scrolling through platforms like TikTok, X (formerly Twitter), or Instagram Reels lately, you’ve likely encountered a peculiar, often humorous, and sometimes biting form of expression: AI-generated videos. These aren’t just simple filters; they are complex, synthesized realities built from text prompts and algorithms, rapidly becoming a go-to tool for satire, commentary, and pure creative experimentation.

China AI-Generated videos depicting MAGA supporters – including Trump himself – working in warehouses sewing and manufacturing are going viral after the trade war between Beijing and Washington kicked off. pic.twitter.com/XP1jd4oyLC

— The American Way With Sheila Kay (@usasheilakay) April 11, 2025

Perhaps you’ve seen the viral clips depicting unlikely scenarios – exaggerated portrayals of political figures in absurd situations, historical icons dropped into modern contexts, or fantastical creatures brought to photorealistic life. One particularly prominent wave involved AI-generated scenes showing caricatured Americans working in fictional sweatshops, often set to traditional Chinese music and sometimes ironically capped with slogans like “Make America Great Again.” These videos, sparked by geopolitical tensions and trade discussions, garnered millions of views, demonstrating AI’s power not just as a technological marvel, but as a potent vehicle for cultural commentary and political satire – the digital evolution of the political cartoon.

Trump taking a 30 min lunch break from the factory work at the fat factory model he created that will make America great again pic.twitter.com/941SMKojGY

— Utamadush 🦇 🔊 ➕ (@utamadush) April 9, 2025

But this phenomenon isn’t limited to pointed satire. Creators are leveraging AI video tools for everything from generating unique music video visuals to creating short, surreal narratives or simply bringing bizarre “what if” scenarios to life. The allure is undeniable: the ability to visualize almost anything imaginable, constrained only by the sophistication of the AI and the clarity of your prompt.

Hilarious

China is making some real stuff though AI right now.

China vs USA was heating up. MAGA #TrumpTariffs #stockmarketcrash #tariff pic.twitter.com/XYrT7tSIfZ

— Anshul Garg (@AnshulGarg1986) April 11, 2025

Ever wondered how these captivating, often complex-looking videos are made? You might be surprised to learn that the barrier to entry is lower than ever. While high-end results once required specialized knowledge and immense computing power, the process is becoming increasingly accessible. Whether you have a robust home computer setup or are willing to invest a modest amount in cloud-based services, crafting your own AI-driven video content is within reach. Of course, opting for completely free, locally run methods often demands significant patience – rendering even short clips can take hours without powerful hardware.

This guide will demystify the process, exploring the steps, tools, and creative considerations involved in generating your own potentially viral AI videos.

Phase 1: The Conceptual Seed – Crafting Your Initial Image

In the image-to-video workflow covered here, every clip starts from a single point: the initial frame. Think of this first image as the visual blueprint that dictates the aesthetic, style, and often the subject matter of the entire sequence. Because image-to-video generators condition the clip on this starting image and animate outward from it, its quality and characteristics are paramount. It sets the tone, the color palette, the character design, and the overall ‘feel’ that the AI will strive to maintain and animate.

You have two primary pathways to acquire this crucial starting frame:

* Using an Existing Image: You could theoretically start with a real photograph or a piece of digital art. This works well if you want to animate a specific, existing visual. However, for the kind of satirical or fantastical content often seen in viral AI videos, finding a suitable real-world photo is highly improbable. Locating an authentic image of a specific politician operating a loom or a historical figure riding a skateboard is usually impossible.

* Generating an AI Image: This is where the true creative power lies. AI image generators allow you to conjure visuals from text descriptions (prompts). Need that image of Donald Trump eating a giant, messy hamburger in a dimly lit factory? Or perhaps Xi Jinping performing a flawless ballet pirouette? AI image generators are your gateway.

Choosing Your Image Generator: Navigating the Options

The landscape of AI image generation is vast and varied. Key considerations include:

* Content Policies: Many popular, commercially backed models (like those integrated into ChatGPT/DALL-E 3 or Midjourney, depending on their current policies) have relatively strict content filters. They might refuse to generate images of specific public figures, copyrighted characters, or anything deemed potentially harmful, controversial, or NSFW (Not Safe For Work).

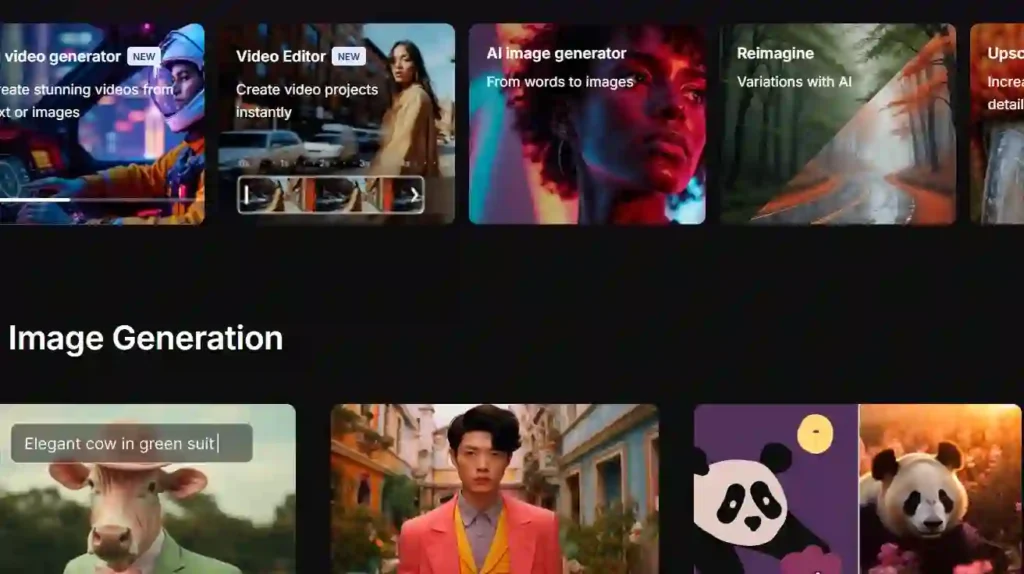

* Uncensored/Open-Source Models: For creators aiming for political satire or edgy content involving real people, less restricted models are often necessary. Open-source options such as the various iterations of Stable Diffusion (including specialized community-trained versions) or models like Flux offer greater freedom. Running them locally requires more technical setup, but cloud platforms increasingly offer access. Some paid platforms also build on these less restricted bases and wrap them in user-friendly interfaces; examples include Freepik’s implementation of Flux (Mystic) and models available through platforms like Ideogram or Reve.

* Quality and Style: Different models excel at different aesthetics. Some aim for photorealism, others lean towards illustration or specific artistic styles. Experimentation is key.

* Training Data: An AI model can only generate what it “knows” based on its training data. If a model consistently fails to produce a recognizable likeness of a specific person, it likely wasn’t trained extensively on images of them or has internal filters preventing it. While advanced users can train custom additions (like LoRAs – Low-Rank Adaptations) for open-source models to recognize specific faces or styles, this adds a significant layer of complexity. Often, simply trying a different model or refining the prompt is more practical.

Example & Prompting: Let’s revisit the “Trump in a sweatshop” example. With an AI image generator such as Freepik Mystic/Flux, a prompt like the following might yield usable results: “Photorealistic image of Donald Trump, looking slightly stressed, sitting at a sewing machine in a dimly lit, cluttered garment factory sweatshop, eating a large, messy hamburger.” It pays to generate several variations, tweaking the prompt slightly each time, until you achieve an image that captures the desired mood and likeness.
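If you prefer to prepare your variations in advance rather than retyping them, the idea of tweaking the prompt slightly each time can be sketched in a few lines of Python. This is only an illustration: the template reuses the example prompt, while the function name and the alternative mood and lighting phrases are made up for the sketch and are not tied to any particular generator.

```python
from itertools import product

# Template based on the example prompt; the {mood} and {lighting} slots
# are an illustrative choice of what to vary.
BASE = (
    "Photorealistic image of Donald Trump, {mood}, sitting at a sewing machine "
    "in a {lighting}, cluttered garment factory sweatshop, "
    "eating a large, messy hamburger."
)


def prompt_variations(moods, lightings):
    """Return one prompt for every (mood, lighting) combination."""
    return [BASE.format(mood=m, lighting=l) for m, l in product(moods, lightings)]


if __name__ == "__main__":
    prompts = prompt_variations(
        ["looking slightly stressed", "looking exhausted"],  # hypothetical alternatives
        ["dimly lit", "harshly lit"],
    )
    for p in prompts:
        print(p)
```

Each printed line can be pasted into the image generator of your choice; two moods and two lighting options give four prompts to compare.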

Pro Tip on Access: While free tiers exist, they often come with limitations (slow speeds, watermarks, fewer features). Subscribing to a comprehensive creative platform (like Freepik, offering image, video, and sound tools) can be cost-effective compared to multiple individual subscriptions. However, weigh this against your usage needs and budget.

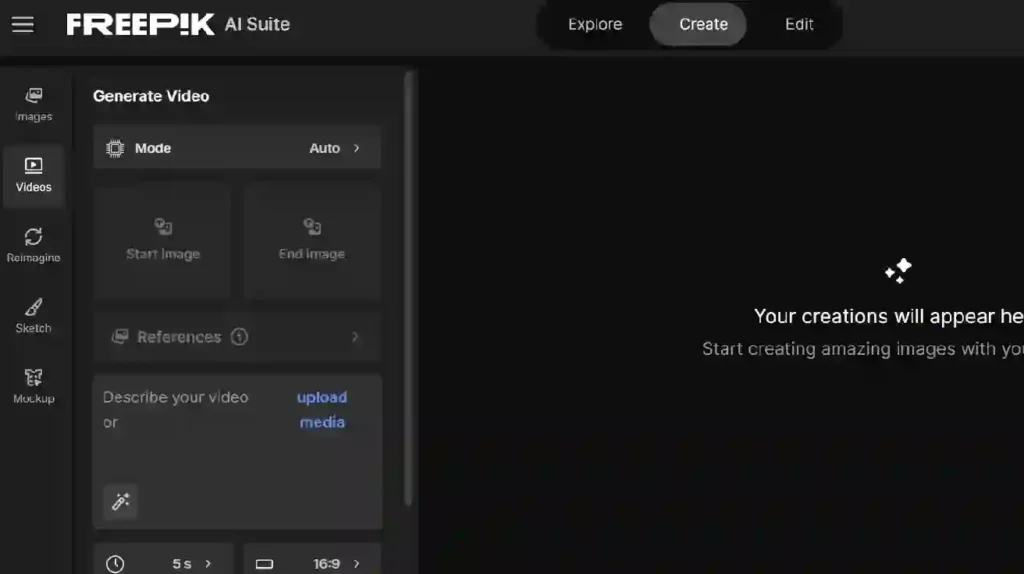

Phase 2: Bringing Stillness to Life – Selecting Your Video Engine

With your foundational image ready, the next step is to choose the AI engine that will animate it. This choice significantly impacts the final video’s motion quality, realism, style, generation speed, cost (if using paid services), and adherence to content restrictions.

Here’s a breakdown of the main factors, along with some example models (this field evolves rapidly, so treat the specifics as a snapshot):

* Quality vs. Speed vs. Cost: High-fidelity, photorealistic models often require more computational resources, leading to longer generation times and higher costs on cloud platforms. Models optimized for speed or specific stylized outputs might be quicker and cheaper but may sacrifice realism.

* Content Restrictions: As with image generators, video models vary in their permissiveness.

    * Less Restricted: Models like Kling AI (popular for the type of political memes discussed) often strike a balance, allowing generation of public figures in various scenarios (within limits, typically avoiding explicit NSFW or extreme violence) and offering relatively accessible tiers. Wan 2.1 (available via platforms like Fal.ai or potentially runnable locally) is known for being open-source and largely unrestricted, allowing for a very wide range of content but potentially requiring more technical know-how or per-clip costs. Luma Dream Machine is noted for producing results quickly and affordably, often with a slightly more surreal or fantastical aesthetic, which can be ideal for certain types of memes.

    * More Restricted: High-end models like Google Veo 2 or Runway’s Gen models often produce stunningly realistic and cinematic results but come with stricter content policies (often prohibiting identifiable public figures in generated content) and can be computationally expensive, consuming more credits on platforms that offer them. These are powerful tools but may not be suitable for specific types of political satire.

* Local vs. Cloud: Running models locally grants maximum freedom and avoids ongoing subscription costs but demands significant hardware investment. A powerful modern GPU (Graphics Processing Unit) with substantial VRAM (Video Random Access Memory) – often 12GB at a minimum, with 16GB or 24GB+ being much better for handling complex models and higher resolutions – is crucial for reasonable performance. Cloud platforms offer access without the hardware hurdle but involve usage costs (subscriptions, pay-per-generation credits).
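As a rough way to apply the VRAM guidance above, the sketch below maps a GPU's memory to the tiers mentioned (12GB, 16GB, 24GB+). The thresholds come from this guide; the function name, the tier labels, and the suggestion to fall back to the cloud below 12GB are assumptions for illustration, not hard requirements of any specific model.

```python
def vram_tier(vram_gb: float) -> str:
    """Classify GPU memory against the rough 12 / 16 / 24 GB guidance."""
    if vram_gb < 12:
        # Assumption: below the usual minimum, cloud services are the easier route.
        return "below minimum: consider a cloud platform"
    if vram_gb < 16:
        return "minimum: expect slow runs and modest resolutions"
    if vram_gb < 24:
        return "better: handles more models comfortably"
    return "best: suited to complex models and higher resolutions"


if __name__ == "__main__":
    for gb in (8, 12, 16, 24):
        print(f"{gb} GB -> {vram_tier(gb)}")
```

The memory figure itself can be read from your GPU vendor's tools, for example `nvidia-smi` on NVIDIA cards.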

For many creators venturing into political or topical memes, engines like Kling or exploring Wan through accessible platforms might offer the best blend of capability and freedom. For purely artistic or less controversial projects, the higher-fidelity restricted models might be appealing if budget and content policy allow.

Phase 3: The Animation Workflow – Image-to-Video Generation

Once you’ve selected your starting image and video generation engine (whether through a web platform or local software), the core process of generating the video clip is relatively consistent:

* Upload Initial Frame: Locate the input field specifically designed for the starting image. This is the most critical input after the prompt itself. Upload the AI-generated image you prepared earlier.

* Craft Your Motion Prompt: In the text prompt field, describe the action and scene evolution you want to see. Be precise but focus on a relatively short, contained action. These models generally excel at creating brief, coherent snippets (typically 4-10 seconds, though capabilities are expanding) rather than complex narratives with multiple distinct events.

    * Example Prompt (following the hamburger scenario): “Close-up shot: The man (Donald Trump) takes a large bite of the juicy hamburger, with melted cheese visibly stretching. He chews with a focused expression. Slight, slow camera zoom-in. Background workers continue sewing, slightly out of focus.”

    * Key Prompting Elements: Describe the main action, any desired camera movement (zoom, pan, dolly), subject expressions, and subtle background details. Keep it concise and focused on what should happen within the short duration of the clip.

* Configure Technical Settings: Specify parameters like:

    * Duration: How long should the generated clip be? (Be realistic based on the model’s capabilities and your patience/budget).

    * Aspect Ratio: Choose widescreen (16:9) for platforms like YouTube, square (1:1) for Instagram feeds, or portrait (9:16) for TikTok/Instagram Stories.

    * Motion Level (Optional): Some tools allow you to specify the intensity of motion.

* Generate and Wait: Hit the generate button. This is where patience becomes a virtue. AI video generation is computationally intensive. Depending on the model’s complexity, the desired duration/resolution, platform traffic (for cloud services), and your hardware (if local), generation can take anywhere from a few minutes to several hours for just a few seconds of footage.

* Iterate and Refine: Your first attempt might not be perfect. The AI might misinterpret the prompt, introduce strange artifacts, or generate awkward motion. It’s often wise to:

    * Generate multiple variations simultaneously if the platform allows it.

    * Tweak the prompt and regenerate.

    * Try adjusting motion settings if available.

    * Start from a slightly different initial frame, which sometimes yields better results.
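To keep track of what you fed into each attempt, the steps above can be captured in a small settings record. The sketch below is tool-agnostic and does not call any real service: the class, the field names, and the hard 4-10 second check are hypothetical choices for illustration, and you would map them onto whatever fields your chosen platform actually exposes. The aspect ratios are the ones listed in the settings step.

```python
from dataclasses import dataclass, asdict

# Aspect ratio per destination, as listed in the settings step.
ASPECT_RATIOS = {
    "youtube": "16:9",
    "instagram_feed": "1:1",
    "tiktok": "9:16",
    "instagram_stories": "9:16",
}


@dataclass
class VideoRequest:
    # Hypothetical field names; adapt them to your tool's real settings.
    image_path: str
    prompt: str
    duration_seconds: int
    aspect_ratio: str


def build_motion_prompt(action, expression="", camera="", background=""):
    """Join the non-empty prompt elements into one short motion prompt."""
    parts = [action, expression, camera, background]
    return " ".join(p.strip() for p in parts if p.strip())


def build_request(image_path, prompt, platform, duration_seconds=5):
    """Bundle the initial frame, prompt, and settings for one generation run."""
    # Assumption: enforce the typical 4-10 second range as a sanity check.
    if not 4 <= duration_seconds <= 10:
        raise ValueError("clip length outside the typical 4-10 second range")
    return VideoRequest(image_path, prompt, duration_seconds, ASPECT_RATIOS[platform])


if __name__ == "__main__":
    prompt = build_motion_prompt(
        action="Close-up shot: The man takes a large bite of the juicy hamburger.",
        expression="He chews with a focused expression.",
        camera="Slight, slow camera zoom-in.",
        background="Background workers continue sewing, slightly out of focus.",
    )
    # "initial_frame.png" is a placeholder file name.
    print(asdict(build_request("initial_frame.png", prompt, "tiktok")))
```

Saving one such record per attempt makes it easier to see which prompt or setting change actually improved the result when you iterate.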

Phase 4: Polishing and Publishing

Once you have a generated clip (or several) that you’re happy with:

* Add Audio: The raw AI video output is usually silent. Adding sound significantly enhances impact. This could be music (like the traditional Chinese music in the sweatshop examples), sound effects (chewing sounds, factory noises), voiceovers, or dialogue snippets. Simple video editing software (even free options like CapCut or DaVinci Resolve) can handle this.

* Combine Clips (Optional): For slightly longer or more complex ideas, you might generate multiple short clips and edit them together.

* Share: Upload your creation to your chosen social media platforms.
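If you are comfortable with the command line, the audio and clip-combining steps can also be done with the free `ffmpeg` tool instead of a graphical editor. Note that `ffmpeg` is a swapped-in alternative here, not one of the editors named above, and the helper function names and file names are hypothetical. The sketch only builds the commands; running them requires `ffmpeg` to be installed.

```python
def mux_audio_cmd(video, audio, output):
    """Build an ffmpeg command that adds an audio track to a silent clip."""
    return [
        "ffmpeg", "-i", video, "-i", audio,
        "-map", "0:v:0", "-map", "1:a:0",  # video from input 0, audio from input 1
        "-c:v", "copy",                    # leave the video stream untouched
        "-c:a", "aac",                     # encode the audio as AAC
        "-shortest",                       # stop at the shorter of the two inputs
        output,
    ]


def concat_list(clips):
    """Build the contents of the list file read by ffmpeg's concat demuxer."""
    return "".join(f"file '{c}'\n" for c in clips)


def concat_cmd(list_file, output):
    """Build an ffmpeg command that joins the clips named in list_file."""
    # Stream copy only works when all clips share codec and resolution.
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_file, "-c", "copy", output]


if __name__ == "__main__":
    print(" ".join(mux_audio_cmd("clip.mp4", "music.mp3", "clip_with_audio.mp4")))
    print(concat_list(["clip1.mp4", "clip2.mp4"]), end="")
    print(" ".join(concat_cmd("clips.txt", "combined.mp4")))
```

To execute a command, pass the list to `subprocess.run(cmd, check=True)`. Clips generated with the same model and settings usually share a format, which is what the stream-copy concatenation assumes.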

Beyond the Meme: The Evolving Landscape

Creating AI-generated videos is more than just a technical exercise; it’s tapping into a new frontier of digital expression. While humorous memes and political satire are grabbing headlines, the potential applications are far broader: visualizing complex data, creating unique educational content, generating abstract art films, prototyping visual effects, and much more.

However, this power comes with considerations. The ease with which realistic (or stylized) depictions of real people can be generated raises ethical questions surrounding deepfakes, misinformation, and consent. While satire often relies on recognizable figures, the line between commentary and malicious misrepresentation can be thin.

Furthermore, the technology is advancing at breakneck speed. Models are becoming more capable, generating longer, more coherent sequences with greater control over motion and detail. What takes hours today might take minutes tomorrow. Staying updated on the latest tools, techniques, and ethical discussions is crucial for any creator in this space.

Conclusion: Your Turn to Create

The world of AI video generation has flung open the doors to unprecedented creative possibilities. What was once the domain of high-end visual effects studios is now accessible to anyone with a decent computer or a willingness to navigate cloud platforms. From crafting pointed political commentary that resonates with millions to simply bringing a fleeting, funny idea to life, the tools are available.

The process involves careful selection of an initial image, thoughtful prompt engineering for both image and video generation, choosing the right AI engine based on your needs (balancing quality, cost, speed, and freedom), and a dose of patience during rendering. By understanding these steps and the capabilities of the available tools, you can move beyond being a passive observer and become an active participant in this exciting, rapidly evolving form of digital creation. The meme wars might be ongoing, but the canvas for AI-powered expression is vast and ready for your unique vision.